Technology vocabulary changes quickly, and it helps to keep up with the terms that matter. One term that now appears often, usually without a definition, is “edge.” In this article, we explain what edge means in technology and why it is significant.

What is Edge in Technology?

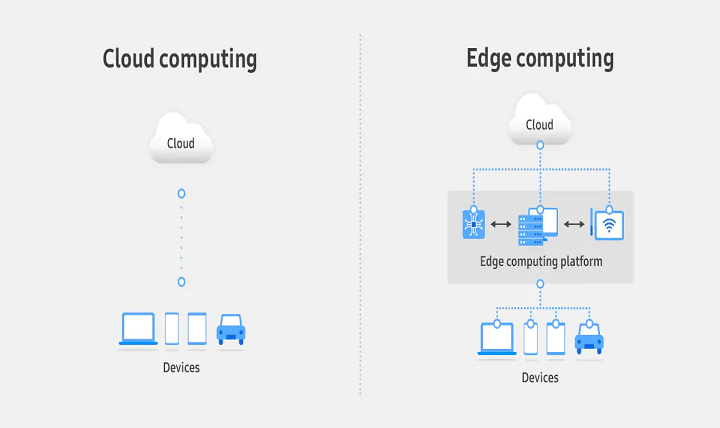

Edge refers to the computing power and data storage located at the edge of a network, close to where data is generated. In simple terms, it covers the devices that collect data from the environment, along with the nearby infrastructure, such as gateways or local servers, that sits between those devices and the cloud. Edge devices process data locally instead of sending all of it to the cloud for analysis.

Because processing happens locally, edge devices can keep operating even without an active internet connection. Edge computing can also reduce latency, limit how much sensitive data leaves the device, and improve the overall performance of the system.
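One common way to keep working through an outage is to queue results locally and upload them once the connection returns. The sketch below is a minimal illustration of that idea, not a production design; the class name `StoreAndForwardBuffer`, the `send` callback, and the sample values are all assumptions made for the example.

```python
from collections import deque


class StoreAndForwardBuffer:
    """Keeps processed readings on the device until an uplink is available."""

    def __init__(self, max_items=1000):
        # A bounded deque drops the oldest entries once full, so a long
        # outage cannot exhaust the device's memory.
        self.pending = deque(maxlen=max_items)

    def record(self, reading):
        # Recording happens regardless of connectivity.
        self.pending.append(reading)

    def flush(self, send, online):
        """Pass queued readings to `send` if `online`; return how many were sent."""
        if not online:
            return 0
        sent = 0
        while self.pending:
            send(self.pending.popleft())
            sent += 1
        return sent


buffer = StoreAndForwardBuffer(max_items=3)
for value in [21.5, 21.7, 22.0, 22.4]:
    buffer.record(value)  # the oldest value is dropped when the buffer is full

uploaded = []  # stands in for a cloud endpoint (hypothetical)
offline_sent = buffer.flush(uploaded.append, online=False)  # nothing leaves the device
online_sent = buffer.flush(uploaded.append, online=True)    # backlog is delivered
```

A real device would also need to handle a failed `send`, for example by putting the reading back in the queue; that is left out here to keep the sketch short.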

Significance of Edge Computing

Edge computing is becoming increasingly important in the era of the Internet of Things (IoT), where billions of devices are connected to the internet. Sending every raw reading from these devices to a central cloud strains network bandwidth and adds delay. Edge computing provides a distributed architecture that processes data closer to its source, reducing the load on the central cloud.
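To make the reduced load concrete, an edge device can summarize a window of raw readings and upload only the summary. The following is a rough sketch under assumed conditions: the `summarize` function, the one-minute window, and the integer sensor values are hypothetical, and JSON length is used only as a simple proxy for payload size.

```python
import json
from statistics import mean


def summarize(readings):
    """Reduce a window of raw readings to a small summary record."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
    }


# Hypothetical one-minute window: one integer sample per second, arbitrary units.
raw_window = [20 + (i % 10) for i in range(60)]

summary = summarize(raw_window)

# Compare the size of what would be uploaded in each case.
raw_bytes = len(json.dumps(raw_window))
summary_bytes = len(json.dumps(summary))
print(f"raw: {raw_bytes} bytes, summary: {summary_bytes} bytes")
```

The trade-off is that the cloud no longer sees individual samples, so the summary has to contain whatever the downstream analysis actually needs.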

Edge computing also improves the user experience by making real-time data processing possible. For instance, consider a self-driving car that relies on multiple sensors to make decisions. With edge computing, the car can process the sensor data on board in real time, without waiting for a round trip to a remote server, allowing for quicker decision-making and a safer driving experience.
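A quick calculation shows why delay matters for a moving vehicle: the distance covered while waiting for a decision is simply speed multiplied by delay. The numbers below are illustrative assumptions, not measurements of any real network or vehicle.

```python
def distance_during_delay(speed_m_per_s, delay_s):
    """Distance a vehicle covers while waiting `delay_s` seconds for a decision."""
    return speed_m_per_s * delay_s


speed = 30.0  # metres per second, about 108 km/h (illustrative)

# Assumed delays for comparison: a cloud round trip versus on-board processing.
cloud_delay = 0.100  # 100 ms
local_delay = 0.010  # 10 ms

cloud_distance = distance_during_delay(speed, cloud_delay)
local_distance = distance_during_delay(speed, local_delay)
print(f"cloud: {cloud_distance:.1f} m, local: {local_distance:.1f} m")
```

Under these assumptions the car travels roughly 3 m before a cloud-based decision arrives, compared with about 0.3 m when the decision is made on board.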

Another advantage of edge computing is improved data security and privacy. When data is processed locally, sensitive information travels over the network less often and does not have to accumulate in a central store, which reduces its exposure to breaches. Edge computing also enables faster response times in critical situations, such as medical emergencies, where every second counts.
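Processing data locally can be as simple as deciding on the device which fields are allowed to leave it. The sketch below assumes a hypothetical wearable; the field names, the `minimize` helper, and the alert threshold are invented for illustration and are not clinical guidance.

```python
def minimize(record, allowed_fields):
    """Return a copy of `record` containing only fields cleared for upload."""
    return {key: value for key, value in record.items() if key in allowed_fields}


# Hypothetical reading from a wearable; field names are illustrative.
reading = {
    "patient_name": "Jane Doe",
    "device_id": "wearable-17",
    "heart_rate_bpm": 142,
    "gps": (52.52, 13.40),
}

# Decide locally whether the reading needs attention (threshold is an assumption).
ALERT_THRESHOLD_BPM = 120
alert = reading["heart_rate_bpm"] > ALERT_THRESHOLD_BPM

# Only the cleared fields, plus the locally computed flag, leave the device.
payload = minimize(reading, {"device_id", "heart_rate_bpm"})
payload["alert"] = alert
```

Because the alert is computed on the device, the response does not depend on a cloud round trip, and the name and location never need to be transmitted.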

Conclusion

Edge computing is enabling use cases that are impractical with a purely centralized cloud model. It provides a distributed architecture in which devices process data locally, reducing latency, improving performance, and enhancing data security and privacy.

Understanding what edge means is useful for anyone working in the technology industry. As more devices become connected to the internet, edge computing will continue to play an important role in enabling new use cases and driving innovation.